This post is the result of a lot of thinking. It’s not very uplifting, but it is where I am right now.

It is presented in two versions: the first is written entirely by yours truly, and the second is an LLM’s rendering of the same ideas.

Why? No idea. Just seemed like an interesting experiment.

Drew McCormack

I’ve been more depressed in the last two months than at any time I can remember. Sure, it was triggered by Trump, but it’s bigger than that…

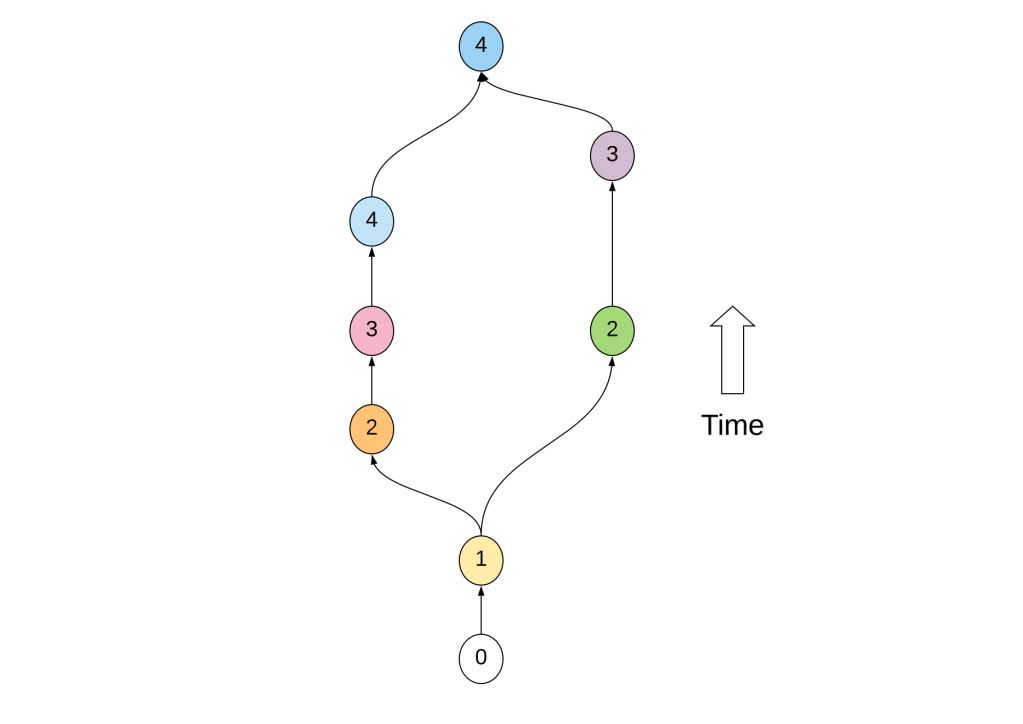

It feels like democracy is failing. It started with the 2016 election, but a lot of us were not really paying attention and just thought of it as an odd detour in history.

Early days

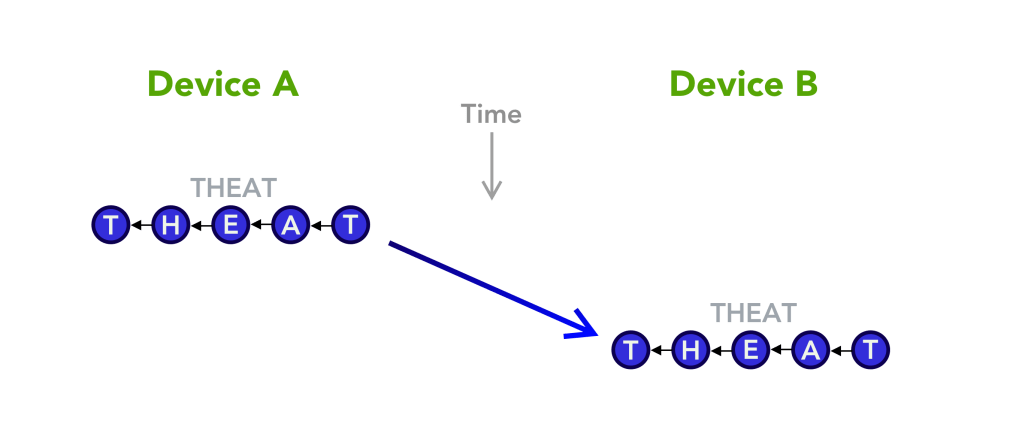

It wasn’t. The Russians and others discovered something important: you can program social media like you can program a machine.

It’s as close as you can get to mind control: Feed them lies. Feed them the truth. Feed them things they like. Feed them things they hate. Measure, optimize, rinse and repeat. You can literally calibrate a society to your goals!

Those were the early days, when you still needed software developers to write the code for the bots, and people to set up fake accounts. LLMs have made that ancient history.

Where we are at

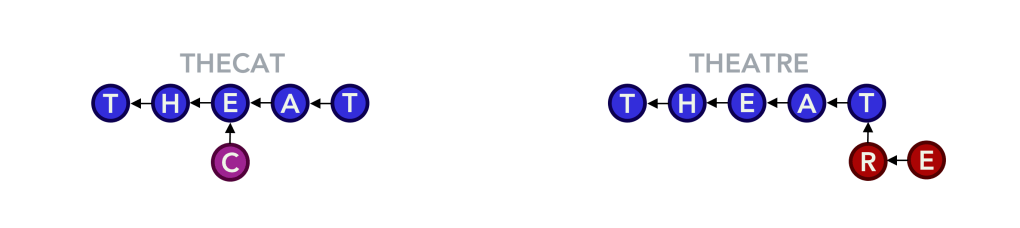

Today’s machine is much more advanced. It can manipulate voters to swing elections, and — in a bizarre non-linear way — it can even alter the social media networks themselves through political pressure.

And you don’t need AGI (Artificial General Intelligence) to do it. The current state of the art is sufficient. Hybrid intelligence. The bionic brain. A human enhanced by an LLM.

An LLM buddy

I’m not just spouting hatred about something I have no experience with. I’ve spent the last few months working almost exclusively with LLMs.

Working with an LLM as a programmer is an entirely different experience to programming alone.

For example, I just spent a day building quite a powerful tool in Python. I have probably written only about 10 lines of Python in the last 20 years, and I didn’t need to write a single line for this project. And yet, there it is: the tool, doing what I wanted it to do.

The LLM didn’t do it alone either. I didn’t just leave it with a single instruction and go home for the day — I guided it every step of the way.

Sometimes it did stupid stuff, like a student still wet behind the ears, but I told it so, and it did better. Occasionally it took five or six attempts to get something I was satisfied with.

But the net result is astonishing, no doubt about it. I would never have made a tool like this before the advent of LLMs.

Old way, new way

It is not that I couldn’t have figured it out on my own; there was nothing there that was beyond my intellect.

There were many practical details I didn’t know, but a few years back, with Google in hand, I could have slowly unpicked the problem. I think it would have taken me at least a week. Instead, I finished it in around 6 hours.

So though I could have built this tool before, it is very unlikely I would have. I would have needed a very good reason to spend a week doing it. As it is, the tool is quite frivolous, so I would never have even started.

Now I can build frivolous tools at will!

Changing industries

So it is going to change everything in my industry, and likely in many others. And that raises questions. To begin with: do I still want to work in this “new” job?

I don’t know. In some ways it is exhilarating to tell a machine to do something and, minutes later, have it done and working as envisaged.

On the other hand, it definitely feels less valuable. Like being the operator of a furniture-making robot instead of a skilled carpenter. It’s a completely different job: you need the understanding of a carpenter, but not the skills.

Changing societies

But changing jobs is not what worries me the most. That has befallen many industries before (including carpentry!). It’s painful for the affected group, but people generally move on and find something else to do.

I don’t think it will be like that this time. For a start, there may not be much to move on to. AI will advance, and even if it is not “intelligent” in itself, it will be able to take over a lot of tasks.

Utopia

So we can all retire and enjoy great lives, right?!

I wish I was that optimistic.

Social media gave Trump the win in 2016, and it introduced the programmable society. Things have advanced quickly, and your opinion is now being constantly manipulated. Human beings have not evolved to handle this.

I’m worried for democracy. It’s a great system when people are left to make up their own minds, but democracy is fast becoming a programmable machine. People are too easily manipulated. We see it in the US, but equally in virtually every democratic society on Earth. The combination of AI with the syringe of social media is lethal.

(Ironically, less “free” countries like China may end up doing better, because if their leaders see the threat, they can quite easily enforce a new direction. In a democracy, bad players can hijack the state for their own benefit.)

Depressed

So I’m depressed. I’ve already lost friends over this: I just can’t talk to them anymore, the political divide has opened so wide.

Sooner or later I will lose my job, and I’m not sure I want the one that comes next.

But most of all, we’re fast losing our freedom. I’m not even American, but I feel it from here in Europe. It feels like we have all lost.

Too late?

Can it be stopped? Part of me hopes people will just wake up to it. Some already have, leaving social media for Mastodon, or whatever.

But these tend to be a minority who grasp the situation, and the majority probably don’t have the insight to deal with it at this point. They are just drowning in the pace of change. Perhaps people will adapt in time. Let’s hope.

An AI Vaccine?

I also wonder whether AI itself might end up providing a remedy. The world has always faced new afflictions, from malware to the coronavirus, and humans usually come up with the antidote.

Maybe the remedy here is software that is looking over your shoulder, seeing your screen, and warning you when it is manipulative. Software fighting software. A junk mail folder for the world.

Common Sense

I want to believe people can pull themselves out of this. I don’t want to live in a George Orwell novel. (How prescient was that guy?!)

As it is, I don’t trust our governments, and I don’t trust our companies. And my job keeps me awake at night.

I’m at loggerheads with myself.

How are things with you?

An LLM Version

I’ve been depressed. A lot. It started with Trump, but it’s not about Trump. It’s about watching democracy fail in slow motion. The cracks began in 2016—Russian hacking, the weaponization of social media, the realization that we’d built a machine that could be programmed to manipulate minds. Feed people lies. Feed them truth. Feed them what they love, what they hate. Measure their reactions. Optimize. Repeat. These were the early days of the machine, a crude prototype fueled by rage and algorithms.

Now, it’s nearly complete.

I’m not even worried about AGI—artificial general intelligence. That’s a fairy tale, or a nightmare, depending on who you ask. If it’s real, we’re probably doomed anyway. What scares me is the hybrid: the bionic brain. Humans working with AI, refining manipulation into an art form.

I’ve spent the last few months working almost exclusively with AI. And it’s left me hollow.

Here’s an example: I built a powerful Python tool recently. Not a single line of code came from me. The LLM didn’t do it alone, either. I guided it, step by step, like a teacher correcting a clueless student. It made stupid mistakes. I called them out. Five, six attempts later, it spit out something usable. The result? Astounding. A tool I’d never have built before. Not because I couldn’t understand it—there’s nothing here beyond my intellect. But the practical details? The time? A week of Googling, debugging, rage-quitting. I don’t have a week to waste on “frivolous” tools anymore.

This changes everything. Not just programming. Every industry.

Is this still my job? Sometimes it’s exhilarating—watching an idea materialize in minutes. But it feels… cheap. Like replacing a carpenter with a furniture-making robot. You need the mind of a craftsman, but not the hands. The value’s gone.

But displacement isn’t the worst of it. We’ve survived automation before. People adapt. This time? There might not be anything left to adapt to. AI doesn’t need consciousness to take over tasks. And while we’re arguing about UBI and early retirement, the real crisis is unfolding elsewhere.

Democracy is a machine now, too. Programmable.

Social media gave us Trump. It proved you could hack human minds at scale. Now, imagine that power refined by AI—opinions shaped in real time, fears and desires micro-targeted, truths and lies indistinguishable. We haven’t evolved to handle this. Neither has democracy. It relies on people making their own choices. What happens when those choices aren’t theirs anymore?

The U.S. is crumbling, but it’s not alone. Authoritarian regimes might fare better. China’s leaders can pivot overnight if they sense threat. In democracies? Bad actors hijack the system. And they have.

I’ve lost friends over this. The political divide isn’t just wide—it’s algorithmic. We’re living in different realities. I’ll probably lose my job next, or morph into something I don’t recognize. But more than anything, I’ve lost the illusion of freedom.

Can it be stopped? Maybe if people wake up. Some flee to Mastodon or shun social media entirely. But that’s a privileged few. The rest don’t have the tools, or the will. Maybe AI will save us—software fighting software, a spam filter for reality. Or maybe we’ll just… surrender.

I don’t trust governments. I don’t trust corporations. My job—this strange, soulless collaboration with machines—keeps me awake at night. Orwell saw it coming. I just never thought I’d live it.

How are things with you?